Vector embeddings, semantic caching, and an interactive demo

In this article, I'll go over what vector embeddings are, how to create and compare them, how they're used to implement RAG (Retrieval-Augmented Generation) systems, and the role that a semantic cache plays.

Feel free to skip the content below and go right to the demo, where I provide an interactive visualization of how adjusting a semantic cache's similarity threshold can affect response quality.

What is a vector embedding

In the context of LLMs, a vector embedding is, in the simplest sense, a list of numbers that represents a particular text's semantic structure. The idea is to take some text, like "hat", as input and output an array of numbers that encodes things like meaning, grammatical structure, and tone.

Let's use OpenAI to generate an embedding:

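    import OpenAI from "openai";

    // The client reads the OPENAI_API_KEY environment variable by default.
    const openai = new OpenAI();

    const {
      data: [{ embedding }],
    } = await openai.embeddings.create({
      model: "text-embedding-3-small",
      input: "hat",
      encoding_format: "float",
    });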

If we console.log the embedding variable in the code above, we should see something like this in our terminal.

[ -0.012111276, 0.0015233769, -0.022680242, 0.05219072, 0.016249353, -0.04161487, -0.051226776, 0.068908274, 0.016634932, -0.026494708, -0.0109407725, 0.01728215, -0.009536168, -0.029028507, 0.01101651, 0.011181759, -0.0023874845, 0.0060349824, -0.002134449, 0.024842232, 0.0069300737, 0.021812692, 0.013763754, -0.004812838, 0.012600134, 0.05849767, -0.0073810625, -0.024098618, 0.05213564, -0.01681395, 0.00033802615, -0.045801144, 0.063124605, -0.061141636, 0.039549273, 0.03687777, -0.010362405, 0.016029023, -0.012276525, -0.00041505566, 0.0031173283, -0.031424597, 0.023657957, 0.030901313, 0.026384544, -0.0040416825, -0.020683499, 0.0014097692, 0.020063821, 0.031231808, 0.007787296, 0.009942401, 0.026770122, 0.115232706, 0.007725328, -0.020118903, -0.00071521255, 0.04640705, 0.032278378, -0.025337975, -0.035445623, 0.006492856, 0.0061141634, -0.0043136524, -0.0241537, -0.05285171, -0.012909974, -0.0059661292, -0.05932391, 0.031314433, -0.011154218, 0.022280892, -0.003893648, -0.0054187463, 0.0142526105, -0.014638189, -0.038502704, 0.0107066715, 0.050180208, -0.031617384, 0.0026749466, 0.008468943, -0.02627438, -0.028367516, -0.02375435, -0.03968698, -0.08758126, 0.017392317, -0.026536021, -0.041366998, 0.052906793, 0.06709055, 0.055495672, -0.005742356, 0.022817949, -0.024208782, -0.043680467, -0.013805065, 0.004217258, -0.018728068, ... 1436 more items ]

That's a lot of encoded information.

What makes the use of vectors so powerful is that we can write straightforward code to compare how similar one vector is to another. Let's write some code to show how we can do that:

    const getVectorEmbedding = async (input: string) => {
      const {
        data: [{ embedding }],
      } = await openai.embeddings.create({
        model: "text-embedding-3-small",
        input,
        encoding_format: "float",
      });
      return embedding;
    };

    // Returns a float between -1 and 1; higher means more similar
    const getCosineSimilarity = (A: number[], B: number[]) => {
      let dotproduct = 0;
      let mA = 0;
      let mB = 0;
      for (let i = 0; i < A.length; i++) {
        dotproduct += A[i] * B[i];
        mA += A[i] * A[i];
        mB += B[i] * B[i];
      }
      mA = Math.sqrt(mA);
      mB = Math.sqrt(mB);
      const similarity = dotproduct / (mA * mB);
      return similarity;
    };

    const similarity = getCosineSimilarity(
      await getVectorEmbedding("hat"),
      await getVectorEmbedding("hate")
    );

The code above uses what's called cosine similarity to compute the similarity value at the end. Cosine similarity technically ranges from -1 to 1, but for text embeddings like these we typically see getCosineSimilarity return a number between 0 and 1, where a number closer to 0 indicates dissimilarity and a number closer to 1 indicates similarity. I intentionally chose to generate embeddings for two words that are textually similar, but semantically quite different. After running the code above, the value of the similarity variable is ~.39. Out of context, this value doesn't mean all that much.
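To make those numbers less abstract, here's a small sketch that runs the same calculation on toy two-dimensional vectors (made up for illustration, not real embeddings). It shows the extremes of cosine similarity: 1 for vectors pointing the same way, 0 for perpendicular vectors, and -1 for vectors pointing in opposite directions. The name cosineSimilarity and the length check are my own additions.

```typescript
// Cosine similarity: the dot product divided by the product of the vector lengths.
const cosineSimilarity = (a: number[], b: number[]): number => {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same number of dimensions");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
};

// Toy 2-dimensional vectors, not real embeddings.
const sameDirection = cosineSimilarity([1, 0], [3, 0]); // 1: length is ignored, only direction matters
const perpendicular = cosineSimilarity([1, 0], [0, 2]); // 0: the vectors share nothing
const opposite = cosineSimilarity([1, 0], [-1, 0]); // -1: the vectors point in opposite directions
```

Note that only direction matters: [1, 0] and [3, 0] have different lengths but still score 1.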

Let's compare it to the cosine similarity of the words "hat" and "cap". Since "cap" is a possible synonym of "hat", we expect a cosine similarity greater than ~.39:

    const hatCapSimilarity = getCosineSimilarity(
      await getVectorEmbedding("hat"),
      await getVectorEmbedding("cap")
    );

And we were right. The value of hatCapSimilarity in the above code is ~.46.

So sure enough, the vector embeddings produced via OpenAI's API contained enough semantic information for our computer to know that "cap" is more similar to "hat" than "hate" is.

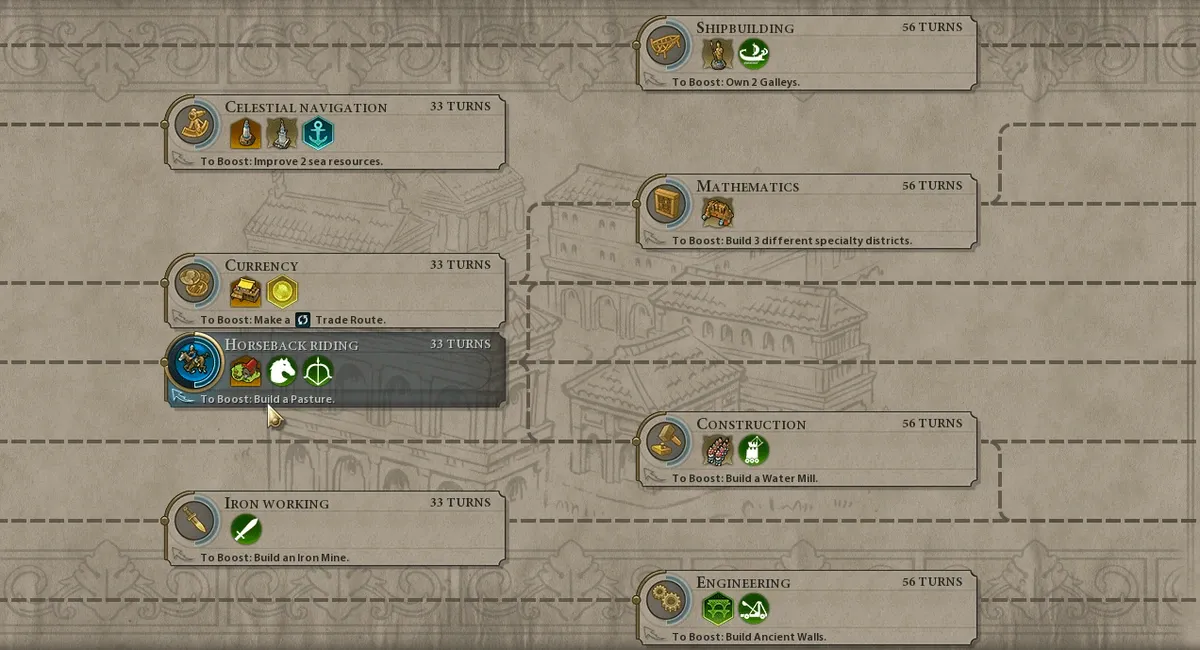

All of this leads to an important realization: vector embeddings unlock programmatic semantic comparisons. I played a lot of Civilization growing up, and learning about vector embeddings felt like unlocking a new branch in my personal technology tree.

Retrieval-Augmented Generation

The most common usage of vector embeddings that I see is called RAG, or Retrieval-Augmented Generation, and it typically looks something like this:

1. Break up a large dataset into small chunks, generate a vector embedding for each chunk, and then store the vectors along with their associated text chunks in a database.

2. Write an HTTP endpoint that converts a user query into a vector embedding and compares it against all of the previously stored chunks.

3. If some of the previously stored chunks are similar, pull them out of the DB and insert them into a completion prompt along with the user's request, like so:

       {users_request}
       ---
       {chunk_one}
       {chunk_two}
       ...
       ...

4. Send the final prompt to an LLM and stream the response back to your user.
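The comparison and prompt-building steps above can be sketched roughly like this. It's a minimal in-memory version that assumes the embeddings have already been generated; the Chunk type, the retrieveChunks and buildPrompt helpers, the 0.8 cutoff, and the sample chunks are all hypothetical, and a real system would typically let a vector database do the comparison.

```typescript
type Chunk = { text: string; embedding: number[] };

const cosineSimilarity = (a: number[], b: number[]): number => {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
};

// Score every stored chunk against the query, keep the ones that are
// similar enough, and return the best matches first.
const retrieveChunks = (
  queryEmbedding: number[],
  store: Chunk[],
  minSimilarity: number,
  maxChunks: number
): Chunk[] =>
  store
    .map((chunk) => ({ chunk, score: cosineSimilarity(queryEmbedding, chunk.embedding) }))
    .filter(({ score }) => score >= minSimilarity)
    .sort((x, y) => y.score - x.score)
    .slice(0, maxChunks)
    .map(({ chunk }) => chunk);

// Place the user's request above the retrieved chunks, separated by "---".
const buildPrompt = (usersRequest: string, chunks: Chunk[]): string =>
  [usersRequest, "---", ...chunks.map((c) => c.text)].join("\n");

// Toy 3-dimensional vectors standing in for real embeddings.
const store: Chunk[] = [
  { text: "To reset your password, open Settings.", embedding: [0.9, 0.1, 0.0] },
  { text: "Invoices are emailed monthly.", embedding: [0.0, 0.2, 0.9] },
];
const queryEmbedding = [0.8, 0.2, 0.1]; // pretend this came from the embeddings API

const matches = retrieveChunks(queryEmbedding, store, 0.8, 3);
const prompt = buildPrompt("How do I reset my password?", matches);
```

With these toy vectors, only the password chunk scores above the cutoff (roughly 0.98 versus 0.17), so it's the only chunk that ends up in the prompt.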

I recommend watching Greg Richardson's YouTube video where he walks through the creation of ClippyGPT, a Supabase documentation assistant that relies on a RAG implementation to answer developer questions about Supabase's software.

The portions of the RAG implementation above that I want to focus on for the remainder of this article are steps two and three. In these steps, we compare the vector embedding of a user's request to the vector embeddings of the data chunks stored in our vector database, and if the vector for some data chunk is similar enough to the vector of the user's query, the data chunk is retrieved. This process has a name: semantic caching.

Semantic cache - a building block for RAG

In a semantic cache, just like in any other cache, a cache hit happens when a lookup finds a "match" in the cache's DB. A "match" might allow a system to re-use previously computed data and avoid doing expensive work, or, like in Greg Richardson's tutorial, maybe it informs the system that it should append "matching" cached content to some prompt completion request. But the definition of "match" in a semantic cache bears little resemblance to a "match" in a typical, non-semantic cache.

In a typical cache, a "match" occurs when a lookup finds an exact match: the string of the lookup key exactly matches the string of an existing DB key. In a semantic cache, by contrast, a "match" occurs when a lookup finds a semantically similar key in the database, so textual precision doesn't matter. Semantic caches have many use cases, some of which have nothing to do with AI, but for the remainder of this article, let's focus on how we can use them to avoid expensive work, specifically prompt completion requests.
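Here's a rough sketch of that difference. The exact cache is a plain Map keyed by strings, while the SemanticCache class (a hypothetical, in-memory illustration, not a real library) is keyed by embeddings and returns the closest entry only if it clears a similarity threshold. The two-dimensional vectors are made up to stand in for real embeddings.

```typescript
const cosineSimilarity = (a: number[], b: number[]): number => {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
};

type Entry = { embedding: number[]; value: string };

class SemanticCache {
  private entries: Entry[] = [];
  private threshold: number;

  constructor(threshold: number) {
    this.threshold = threshold;
  }

  set(embedding: number[], value: string): void {
    this.entries.push({ embedding, value });
  }

  // Returns the value of the most similar entry, or undefined on a cache miss.
  get(embedding: number[]): string | undefined {
    let best: Entry | undefined;
    let bestScore = -Infinity;
    for (const entry of this.entries) {
      const score = cosineSimilarity(embedding, entry.embedding);
      if (score > bestScore) {
        best = entry;
        bestScore = score;
      }
    }
    return best !== undefined && bestScore >= this.threshold ? best.value : undefined;
  }
}

// Exact cache: one changed word in the key is enough to miss.
const exactCache = new Map<string, string>();
exactCache.set("Create a fitness routine to help me build muscle.", "cached routine");
const exactResult = exactCache.get("Create a fitness routine to help me gain muscle.");

// Semantic cache: nearby vectors hit, distant vectors miss.
const semanticCache = new SemanticCache(0.9);
semanticCache.set([0.9, 0.4], "cached routine"); // toy embedding of the "build muscle" request
const semanticHit = semanticCache.get([0.88, 0.45]); // toy embedding of a close paraphrase
const semanticMiss = semanticCache.get([0.1, 0.95]); // toy embedding of an unrelated request
```

The linear scan in get is fine for a sketch, but it compares against every entry, which is the part a vector database would normally handle for you.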

Example fitness routine AI

Semantic caches rely on vector embeddings to determine whether or not a key is similar enough to an existing key in the cache DB. Let's look at two example keys, where each key is a request to an AI fitness planner service:

    key #1: "Create a fitness routine to help me build muscle."
    key #2: "Create a fitness routine to help me gain muscle."

If the first key already exists in a semantic cache, should we consider the second key a "match"? The answer depends on the cache's similarity threshold.

As its name suggests, a cache's similarity threshold is the minimum semantic similarity between an existing key and the requested key needed to consider the keys a "match".

So back to our two keys in the example above. Let's calculate their cosine similarity:

    key #1: "Create a fitness routine to help me build muscle."
    key #2: "Create a fitness routine to help me gain muscle."
    COSINE SIMILARITY: ~.96

As expected, the similarity value for these two sentences is close to 1. Now let's change the second key by appending a single word to it and then recalculate the new cosine similarity:

    key #1: "Create a fitness routine to help me build muscle."
    key #2: "Create a fitness routine to help me gain muscle fast."
    COSINE SIMILARITY: ~.89

Ok, so the addition of the word "fast" at the end of the second key caused the cosine similarity to decrease. If these requests occurred in a system that uses a semantic cache and the cache's similarity threshold is set to .9, only the first example of the two keys, the one without the word "fast", would result in a cache hit.
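To see how much the outcome depends on the threshold, here's a tiny sketch that checks the two approximate similarity values from above against a few candidate thresholds. The isCacheHit helper and the extra thresholds (0.85 and 0.97) are my own illustration, and treating a similarity exactly equal to the threshold as a hit is an assumption.

```typescript
// A lookup is a hit when the similarity meets or exceeds the threshold
// (counting an exact tie as a hit is an assumption of this sketch).
const isCacheHit = (similarity: number, threshold: number): boolean =>
  similarity >= threshold;

// Approximate similarities to key #1, taken from the two examples above.
const similarities = {
  gainMuscle: 0.96, // "...help me gain muscle."
  gainMuscleFast: 0.89, // "...help me gain muscle fast."
};

// Count how many of the two requests would hit at a given threshold.
const hitsAt = (threshold: number): number =>
  Object.values(similarities).filter((s) => isCacheHit(s, threshold)).length;

const hitsAtLoose = hitsAt(0.85); // 2: both requests hit
const hitsAtArticle = hitsAt(0.9); // 1: only the request without "fast" hits
const hitsAtStrict = hitsAt(0.97); // 0: neither request hits
```

Nudging the threshold by a few hundredths is enough to flip a request between a hit and a miss, which is the relationship the demo below visualizes.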

And maybe this makes sense to fitness enthusiasts. Maybe building muscle quickly and building muscle sustainably require significantly different strategies.

Determining the value of a semantic cache's similarity threshold greatly depends on the application where it's used. For example, an LLM documentation assistant might not require a high similarity threshold, since its users are savvy enough to parse out irrelevant responses on their own, whereas an LLM medical assistant might need a high similarity threshold, due to the negative consequences of misinformation.

Furthermore, for some applications, a single similarity threshold for all types of requests might not work at all. We might need to use something like OpenAI functions to first determine which category a request falls under, and then adjust the semantic similarity threshold we impose on the request based on its category.
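One way that idea might look in code is a lookup table of thresholds per category. Everything here is hypothetical: the categories, the threshold values, and especially categorize, which is a keyword-matching placeholder for whatever LLM-based classification step you'd actually use.

```typescript
type Category = "medical" | "documentation" | "general";

// Illustrative values only; real thresholds would need tuning per application.
const THRESHOLDS: Record<Category, number> = {
  medical: 0.97, // misinformation is costly, so require a near-identical match
  documentation: 0.85, // users can filter out irrelevant answers themselves
  general: 0.9,
};

// Placeholder classifier. In a real system this step would be an LLM call;
// keyword matching is used here only to keep the sketch self-contained.
const categorize = (request: string): Category => {
  const text = request.toLowerCase();
  if (/\b(dose|dosage|symptom|medication)\b/.test(text)) return "medical";
  if (/\b(api|install|docs|error)\b/.test(text)) return "documentation";
  return "general";
};

// Pick the similarity threshold to apply to this request's cache lookup.
const thresholdFor = (request: string): number => THRESHOLDS[categorize(request)];

const medicalThreshold = thresholdFor("What dosage of ibuprofen is safe?");
const docsThreshold = thresholdFor("How do I install the CLI?");
const generalThreshold = thresholdFor("Plan a weekend trip for me.");
```

The cache lookup itself stays the same; only the threshold passed into it changes per request.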

A demo to put it all together

I present a demo to better visualize the relationship between the likelihood of a cache hit and the value of a semantic threshold. The visualization uses UMAP to visualize semantic clustering.

Conclusion

That's all, folks. I focus on React/NextJS, but feel free to email me at [email protected] with any other questions or inquiries.